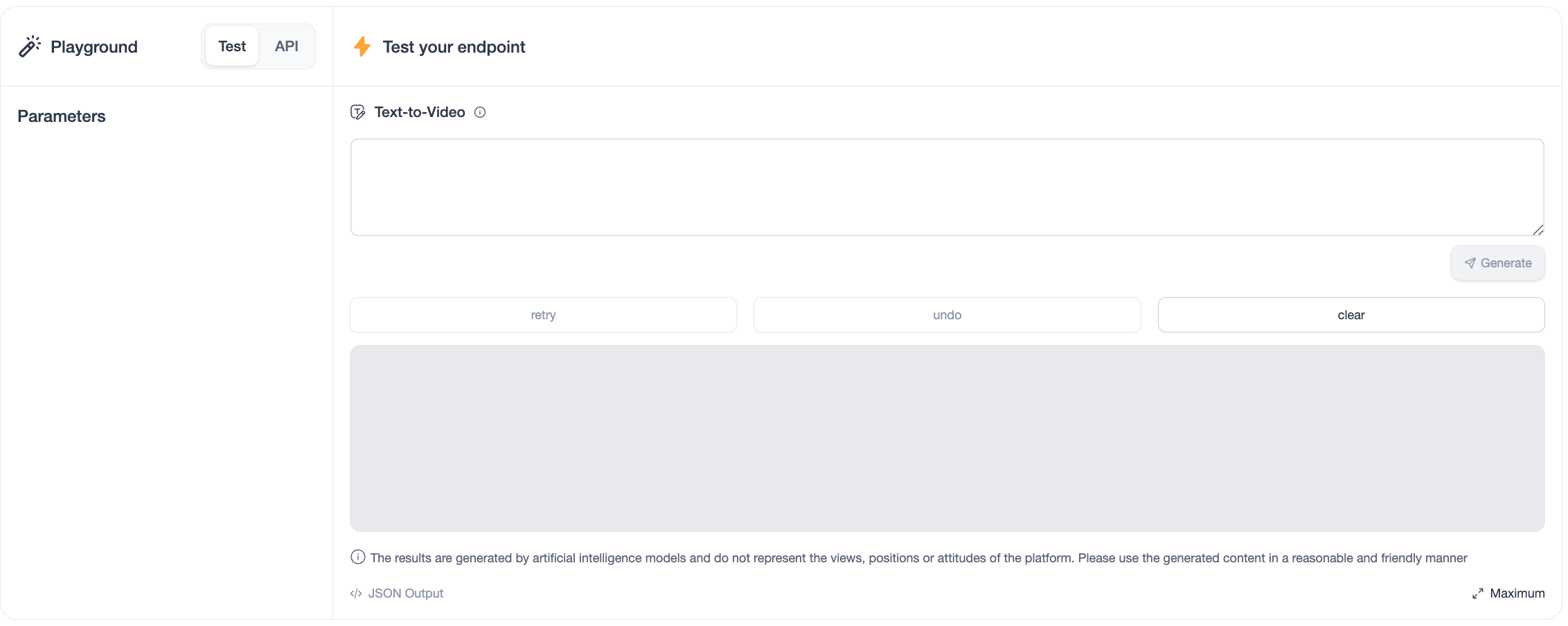

Text To Video

1. What Is a Text-to-Video Task?

Text-to-video generation is a task in which a large-scale model produces video content that matches a given textual prompt through model inference. It combines natural language understanding, computer vision, and temporal modeling: the model must not only generate individual frames but also capture motion, camera transitions, and temporal consistency across the sequence. It is widely used in creative content production, film and animation workflows, advertising, and marketing.

2. Typical Application Scenarios

- Creative Content Creation: Generate short videos, concept animations, or artistic visuals based on textual descriptions.

- Advertising and Marketing: Rapidly produce product showcase videos, brand promotional clips, or social media short videos.

- Film and Animation Production: Generate storyboard previews, scene drafts, or animation clips to support early-stage production.

- Game Development: Create character motion demonstrations, world-building scene videos, or cinematic cutscene references.

- Education and Research: Generate dynamic visualizations for instructional demonstrations or research presentations.

3. Key Factors Affecting Generation Quality

Model Selection

- Text-to-video models differ in visual quality, temporal consistency, motion coherence, and style stability. Select a model based on the target video duration, resolution, and content complexity.
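The selection criteria above can be made concrete with a small lookup. The following is only a minimal sketch: the `ModelProfile` type, the `select_model` helper, and every model name and limit in `CATALOGUE` are hypothetical placeholders invented for illustration, not real models or platform values. It assumes the catalogue is ordered from the lightest model to the heaviest, so the first match is the least costly one that satisfies the requirements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical capability summary for one text-to-video model."""
    name: str
    max_duration_s: float         # longest clip the model can produce
    max_height: int               # highest supported vertical resolution
    handles_complex_motion: bool  # whether complex scenes stay coherent


# Placeholder catalogue, ordered lightest to heaviest.
# All names and limits are invented for illustration only.
CATALOGUE: List[ModelProfile] = [
    ModelProfile("fast-preview", 4.0, 480, False),
    ModelProfile("balanced", 8.0, 720, True),
    ModelProfile("high-fidelity", 16.0, 1080, True),
]


def select_model(
    duration_s: float,
    height: int,
    complex_motion: bool,
    catalogue: List[ModelProfile] = CATALOGUE,
) -> Optional[ModelProfile]:
    """Return the first profile that meets every requirement, or None."""
    for profile in catalogue:
        if profile.max_duration_s < duration_s:
            continue  # clip would be too long for this model
        if profile.max_height < height:
            continue  # requested resolution is too high
        if complex_motion and not profile.handles_complex_motion:
            continue  # content is too complex for this model
        return profile
    return None
```

For example, `select_model(3, 480, True)` skips the lightest profile because it does not handle complex motion and returns the next one, while a request that exceeds every profile's limits returns `None`, so the caller can shorten the clip or lower the resolution.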

4. Code Example

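import requests
import json
import time

# Task endpoint; "xxxxxx" is a placeholder in the host name.
url = "https://xxxxxx.opencsg-stg.com/v1/tasks/video/"

headers = {
    'Content-Type': 'application/json'
}

# Request body: the prompt, an optional negative prompt, and model parameters.
data = {
    "prompt": "your prompt",
    "negative_prompt": "",
    "parameters": {}
}

# Send the body as JSON and raise an exception on an HTTP error status.
response = requests.post(url, json=data, headers=headers)
response.raise_for_status()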