Text To Image

1. What Is the Text-to-Image Generation Task?

Text-to-image generation is a task in which a large model generates images that match the description given in a text prompt. It combines natural language processing with computer vision and is widely used in fields such as creative design, advertising production, and game development.

2. Typical Use Cases

- Creative Design: Generate unique images from descriptions, such as illustrations, artwork, and posters.

- Advertising Production: Quickly create marketing images that match brand features, enhancing design efficiency.

- Product Prototyping: Assist industrial design by generating product styles or concept images.

- Entertainment Content: Generate animation characters or scene designs to support film and game production.

- Education and Research: Create visual content to support teaching or to illustrate research papers.

3. Key Factors Impacting Generation Quality

Model Selection

Models differ in image detail, style consistency, and other characteristics, so choose a model based on the requirements of your task.

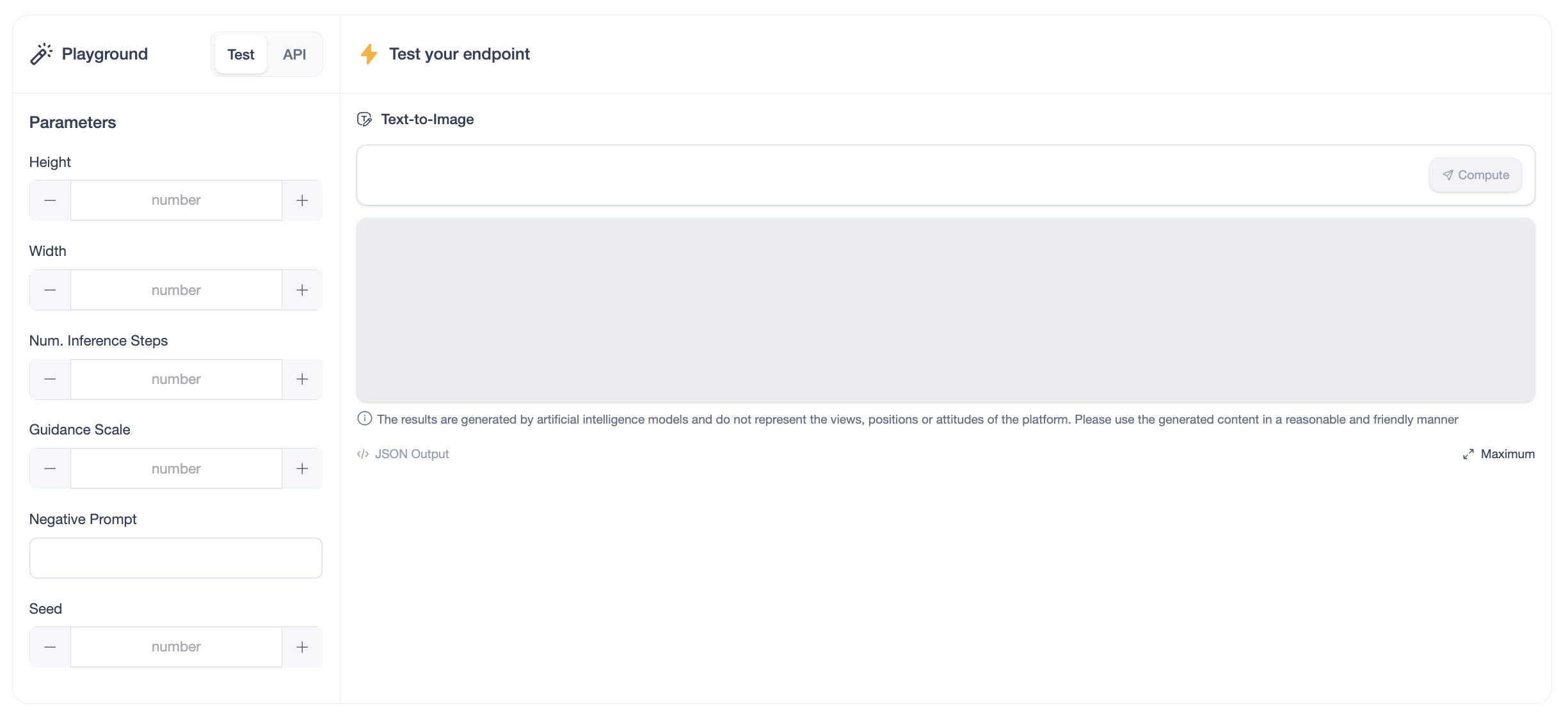

Parameter Adjustments

Below are the key parameters affecting text-to-image generation quality:

Height and Width

- The dimensions of the generated image, with height and width each specified in pixels.

- Use Cases: Customize image proportions for different scenarios, such as vertical images for mobile devices or horizontal banners for web pages.

- Note: Higher resolutions increase generation time and resource consumption.
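
As a rough sketch of picking dimensions for a target aspect ratio, the helper below fixes the longer side and derives the shorter one, rounding down to a multiple of 8. The function name `dims_for_aspect`, the default `long_side` of 1024, the multiple of 8, and the `width`/`height` parameter keys are all assumptions made for illustration; check the size constraints and parameter names of the model you actually deploy.

```python
def dims_for_aspect(aspect_w: int, aspect_h: int,
                    long_side: int = 1024, multiple: int = 8) -> tuple[int, int]:
    """Return (width, height) in pixels for the given aspect ratio.

    The longer side equals long_side; the shorter side is scaled to match
    and rounded down to a multiple of `multiple` (an assumed constraint).
    """
    if aspect_w <= 0 or aspect_h <= 0:
        raise ValueError("aspect ratio components must be positive")

    def snap(value: int) -> int:
        # Round down to the nearest multiple, but never below one multiple.
        return max(multiple, value // multiple * multiple)

    if aspect_w >= aspect_h:
        width, height = long_side, long_side * aspect_h // aspect_w
    else:
        width, height = long_side * aspect_w // aspect_h, long_side
    return snap(width), snap(height)


# Vertical 9:16 image for a mobile screen.
width, height = dims_for_aspect(9, 16)
# Hypothetical parameter keys; the endpoint may use different names.
parameters = {"width": width, "height": height}
```

Keeping the longer side fixed bounds the pixel count, which is what drives the generation time and resource cost mentioned in the note above.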

Number of Inference Steps

- Sets how many iterative refinement steps the model performs when generating an image. More steps typically improve image quality but also increase generation time.

- Use Cases: Use more steps for high-quality images and fewer steps for rapid sketch generation.
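
One simple way to apply this trade-off is to keep a few named presets and pick one per request. The sketch below is only illustrative: the preset names, the step counts, and the `num_inference_steps` key are assumptions, and suitable values depend on the model.

```python
# Illustrative step counts only; suitable values depend on the model.
STEP_PRESETS = {"draft": 10, "standard": 30, "final": 50}


def steps_for(quality: str) -> int:
    """Look up the step count for a named quality preset."""
    try:
        return STEP_PRESETS[quality]
    except KeyError:
        raise ValueError(
            f"unknown preset {quality!r}; choose from {sorted(STEP_PRESETS)}"
        ) from None


# Quick sketch: few steps, fast turnaround.
# Hypothetical parameter key; the endpoint may use a different name.
parameters = {"num_inference_steps": steps_for("draft")}
```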

Guidance Scale

- Controls how closely the model's generated output aligns with the input text prompt.

- Higher guidance scales produce results that follow the text description more closely but may sacrifice creativity and variation in detail.

- Lower guidance scales offer greater creativity but may deviate from the expected description.

- Use Cases: Use a higher guidance scale when the scene must match the prompt precisely, and a lower one for more divergent, creative content.
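
For intuition, many diffusion models implement this parameter through classifier-free guidance: the guided prediction is the unconditional prediction plus the scaled difference between the prompt-conditioned and unconditional predictions. The sketch below assumes that scheme (this document does not state which mechanism a given model uses) and uses plain lists of numbers in place of real model outputs; `apply_guidance` is a hypothetical name.

```python
def apply_guidance(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Combine two predictions as uncond + scale * (cond - uncond)."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]  # toy stand-in for the prediction without the prompt
cond = [1.0, 3.0]    # toy stand-in for the prediction with the prompt

no_prompt = apply_guidance(uncond, cond, 0.0)  # scale 0 ignores the prompt
plain = apply_guidance(uncond, cond, 1.0)      # scale 1 is the conditioned prediction
pushed = apply_guidance(uncond, cond, 2.0)     # scale > 1 extrapolates past it
```

Under this assumption, scales above 1 push the output further in the direction the prompt suggests, which is why high values track the text closely at the cost of variety.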

Negative Prompt

- Tells the model which elements to avoid generating. For instance, when creating images of people, a negative prompt can exclude qualities like "blur" or "distortion."

- Use Cases: Enhance the accuracy of generated results by setting negative prompts to avoid specific details or undesired elements.
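
A negative prompt is usually passed as a single string. The sketch below assembles one from a list of unwanted terms, dropping blanks and duplicates; the helper name `build_negative_prompt`, the comma-separated format, and the `negative_prompt` key are assumptions rather than a documented interface.

```python
def build_negative_prompt(terms: list[str]) -> str:
    """Join terms into one comma-separated string.

    Blank entries and case-insensitive duplicates are dropped; the
    original order of the remaining terms is kept.
    """
    seen = set()
    kept = []
    for term in terms:
        cleaned = term.strip()
        key = cleaned.lower()
        if cleaned and key not in seen:
            seen.add(key)
            kept.append(cleaned)
    return ", ".join(kept)


negative = build_negative_prompt(["blur", "distortion", " Blur ", ""])
# Hypothetical parameter key; the endpoint may use a different name.
parameters = {"negative_prompt": negative}
```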

Seed

- Controls randomness during the generation process. Using the same seed with an identical prompt and identical parameters reproduces a consistent output, whereas different seeds produce diverse results.

- Use Cases: Fix the seed to reproduce a specific image or to isolate the effect of other parameters during tuning; vary the seed to generate diverse images.
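
The effect of a seed can be illustrated without a model. In the sketch below, Python's `random.Random` stands in for the model's noise sampler, which is an assumption made purely for demonstration; `sample_noise`, `seed_sweep`, and the `seed` parameter key are hypothetical names.

```python
import random


def sample_noise(seed: int, size: int = 4) -> list[float]:
    """Draw `size` Gaussian values from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def seed_sweep(base_seed: int, count: int) -> list[dict]:
    """Build one parameters dict per seed, for generating varied images."""
    # "seed" is a hypothetical parameter key.
    return [{"seed": base_seed + i} for i in range(count)]


same = sample_noise(42) == sample_noise(42)       # same seed, same starting noise
different = sample_noise(42) != sample_noise(43)  # new seed, new starting noise
variants = seed_sweep(7, 3)                       # three requests that differ only in seed
```

Sweeping the seed while holding every other parameter fixed is a convenient way to collect several candidates for one prompt.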

4. Sample Code

import requests
import json
import re

url = "https://xxxxxxxxxxxx.space.opencsg.com"  # endpoint url

headers = {
    'Content-Type': 'application/json'
}

data = {
    "inputs": "your image messages",  # text prompt describing the image
    "parameters": {}  # optional generation parameters
}

response = requests.post(url=url, json=data, headers=headers, stream=True)
response.raise_for_status()  # raise an exception on an HTTP error status
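
The parameters described in section 3 could be supplied through the `parameters` field of the request. The following is a minimal sketch, not the endpoint's documented interface: the key names (`width`, `height`, `num_inference_steps`, `guidance_scale`, `negative_prompt`, `seed`), the example values, the helper names, and the assumption that the response body is raw image bytes all need to be checked against your deployment.

```python
def build_payload(prompt: str, **parameters) -> dict:
    """Build the request body, omitting parameters left as None."""
    return {
        "inputs": prompt,
        "parameters": {k: v for k, v in parameters.items() if v is not None},
    }


def generate_image(url: str, payload: dict, out_path: str, timeout: float = 120) -> str:
    """POST the payload and write the response body to out_path.

    Assumes the endpoint answers with raw image bytes; adapt this if it
    returns JSON or base64-encoded data instead.
    """
    import requests  # imported here so payloads can be built without the dependency

    response = requests.post(url, json=payload, timeout=timeout)
    response.raise_for_status()
    with open(out_path, "wb") as f:
        f.write(response.content)
    return out_path


payload = build_payload(
    "a lighthouse at dusk, watercolor",
    width=768,
    height=512,
    num_inference_steps=30,
    guidance_scale=7.5,
    negative_prompt="blur, distortion",
    seed=None,  # left unset, so it is omitted from the payload
)
# generate_image("https://xxxxxxxxxxxx.space.opencsg.com", payload, "output.png")
```

Dropping unset parameters lets the endpoint fall back to its own defaults instead of receiving explicit nulls.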